Uploading Agent Data¶

If an agent needs to upload data to Insights Hub, additional configuration is required so that Insights Hub knows how to interpret the agent's data stream. This configuration requires the following definitions:

- Data Source Configuration

- Property Set Provisioning

- Mapping for Data Source and Property Set

Preparing Data Upload¶

Creating a Data Source Configuration¶

Insights Hub needs a data source configuration for interpreting the data it receives from an agent. Without this configuration Insights Hub cannot understand the data. The data source configuration contains data sources and data points. Data sources are logical groups, e.g. a sensor or a machine, which contain one or more measurable data points, e.g. temperature or pressure.

Use the Agent Management Service to create the data source configuration as shown below:

PUT /api/agentmanagement/v3/agents/{agent_id}/dataSourceConfiguration HTTP/1.1

Content-Type: application/json

If-Match: etag

Authorization: Bearer eyx...

{

"configurationId": "string",

"dataSources": [

{

"name": "string",

"description": "string",

"dataPoints": [

{

"id": "string",

"name": "string",

"description": "string",

"type": "int",

"unit": "string",

"customData": {}

}

],

"customData": {}

}

]

}

| Parameter | Description | Remarks |
|---|---|---|
| dataSource.name | Name of the data source | Mandatory. Any string is allowed. |
| dataSource.description | Description of the data source | Optional. Any string is allowed. |
| dataPoint.id | Data point ID | Mandatory. Must be unique per agent. No duplicates are allowed. |
| dataPoint.name | Name of the data point | Mandatory. Any string is allowed, e.g. pressure, voltage, current, etc. |
| dataPoint.description | Description of the data point | Optional. Any string is allowed. |
| dataPoint.type | Data type of the data point. By default, the following base types are provided: Double, Long, Int, String, Boolean | Mandatory |
| dataPoint.unit | Unit of the data point | Mandatory. Any string is allowed, e.g. percent, SI, etc. Empty strings are allowed. |
| dataPoint.customData | Custom data, if any is provided | Optional |
| dataSources.customData | Custom data, if any is provided | Optional |

If successful, Insights Hub returns an HTTP response 200 OK with a JSON body that holds the created data source configuration:

{

"id": "string",

"eTag": "2",

"configurationId": "string",

"dataSources": [

{

"name": "string",

"description": "string",

"dataPoints": [

{

"id": "string",

"name": "string",

"description": "string",

"type": "int",

"unit": "string",

"customData": {}

}

],

"customData": {}

}

]

}

Warning

When an existing Data Source Configuration is updated, all Data Point Mappings of the agent are deleted.

Note

For consuming exchange services the parameter configurationId needs to be provided to Insights Hub.
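As a rough sketch of the request described in this section, the following Python snippet assembles a data source configuration body, checks that data point IDs are unique, and prepares (but does not send) the PUT request. The host name is borrowed from the compression samples later in this document. The helper names, the sample data source, the ETag value and the token are placeholders chosen for illustration.

```python
import json
import urllib.request

# Assumption: region-specific gateway host, as used in the compression samples.
GATEWAY = "https://gateway.eu1.mindsphere.io"


def build_data_source_configuration(configuration_id, data_sources):
    """Return the request body, rejecting duplicate data point IDs."""
    seen = set()
    for source in data_sources:
        for point in source["dataPoints"]:
            if point["id"] in seen:
                raise ValueError(f"duplicate data point id: {point['id']}")
            seen.add(point["id"])
    return {"configurationId": configuration_id, "dataSources": data_sources}


def prepare_put_request(agent_id, etag, token, body):
    """Build the PUT request object; urllib.request.urlopen() would send it."""
    url = f"{GATEWAY}/api/agentmanagement/v3/agents/{agent_id}/dataSourceConfiguration"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "If-Match": str(etag),
            "Authorization": f"Bearer {token}",
        },
        method="PUT",
    )


# Hypothetical sample values for illustration only.
body = build_data_source_configuration(
    "configuration1",
    [{
        "name": "Motor",
        "description": "Main drive",
        "dataPoints": [
            {"id": "DP0001", "name": "Voltage", "description": "",
             "type": "int", "unit": "%"},
        ],
    }],
)
request = prepare_put_request("11961bc396cd4a87a9b26b723f5b7ba0", 1, "eyx...", body)
print(request.get_method(), request.full_url)
```

Checking for duplicate IDs on the client side mirrors the uniqueness rule in the parameter table, so an invalid configuration can be caught before a request is made.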

Creating a Data Point Mapping¶

Creating a data point mapping requires not only a data source configuration, but also a corresponding property set in Insights Hub.

Insights Hub needs a data point mapping for storing the data it receives from an agent. The mapping links the data points from the data source configuration to properties of the digital entity that represents the agent. When Insights Hub receives data from an agent, it looks up which property the data point is mapped to and stores the data there.

Use the MindConnect API to create the data point mapping as shown below:

POST /api/mindconnect/v3/dataPointMappings HTTP/1.1

Content-Type: Application/Json

Authorization: Bearer eyc..

{

"agentId": "11961bc396cd4a87a9b26b723f5b7ba0",

"dataPointId": "DP0001",

"entityId": "83e78008eadf453bae4f5c7bef3db550",

"propertySetName": "ElectricalProperties",

"propertyName": "Voltage"

}

| Parameter | Description | Trouble Shooting |

|---|---|---|

agentId | String, validated, null-checked. Must exist in Agent Management. | HTTP 400: Agent is empty! HTTP 404: AgentId, {...} in the data point mapping does not exist! |

dataPointId | String, validated, null-checked. Must exist in Agent Management and belong to the specified agent's data source configuration. | HTTP 400: DataPointId is empty! HTTP 404: DataPointId, {...} in the data point mapping does not exist in the related agent! |

entityId | String, validated, null-checked. Must exist in IoT Entity Service. | HTTP 400: Entity ID is empty! HTTP 404: Not Found |

propertySetName | String, validated, null-checked. Must exist in IoT Type Service and belong to entity type of the specified entity. | HTTP 400: PropertySetName is empty! HTTP 404: Property set not found with name {...} HTTP 404: Entity type does not own a property set with name {...} |

propertyName | String, validated. Must exist in IoT Type Service and belong to the specified property set. | HTTP 400: PropertyName is empty! HTTP 404: Property set does not own a property with name {...} |

The following aspects have to be considered:

- Unit and type of data point and property should match.

- If the property does not have a unit (NULL), the data point unit should be empty string.

- The selected property cannot be static.

- One data point cannot be mapped to multiple properties with the same property name.

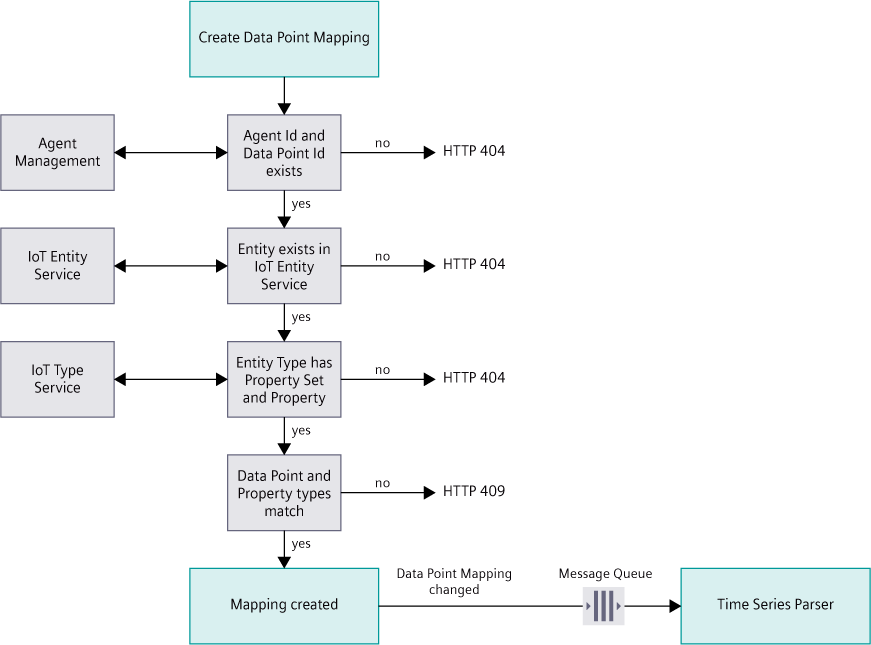

Before creating a data point mapping, the following checks are executed:

If successful, Insights Hub returns an HTTP response 201 Created with a JSON body that holds the created mapping configuration. Each request maps a single data point to a single property. If more mappings are needed, this step must be repeated.

{

"id": "4fad6258-5def-4d84-a4c2-1481b209c116",

"agentId": "11961bc396cd4a87a9b26b723f5b7ba0",

"dataPointId": "DP0001",

"dataPointUnit": "%",

"dataPointType": "INT",

"entityId": "83e78008eadf453bae4f5c7bef3db550",

"propertySetName": "ElectricalProperties",

"propertyName": "Voltage",

"propertyUnit": "%",

"propertyType": "INT",

"qualityEnabled": true

}

Warning

When an existing data source configuration is updated, all data point mappings of the agent are deleted.
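The compatibility rules for a data point and its target property can be sketched as a small local check before the mapping request is sent. This is only an illustration: the dictionary layout, the `static` key and the case-insensitive type comparison (the request samples use `int`, the response shows `INT`) are assumptions, not part of the MindConnect API.

```python
def check_mapping(data_point, prop):
    """Return a list of problems; an empty list means the pair looks mappable."""
    problems = []
    # Assumption: a hypothetical "static" flag marks static properties.
    if prop.get("static", False):
        problems.append("property is static")
    # Assumption: type names are compared case-insensitively.
    if data_point["type"].upper() != prop["type"].upper():
        problems.append("type mismatch")
    # A property without a unit (None) pairs with an empty data point unit.
    expected_unit = prop.get("unit") or ""
    if data_point["unit"] != expected_unit:
        problems.append("unit mismatch")
    return problems


voltage_point = {"id": "DP0001", "type": "int", "unit": "%"}
voltage_property = {"name": "Voltage", "type": "INT", "unit": "%"}
print(check_mapping(voltage_point, voltage_property))
```

Returning a list of problems rather than a single boolean makes it easier to report every mismatch at once.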

Creating Event Mapping¶

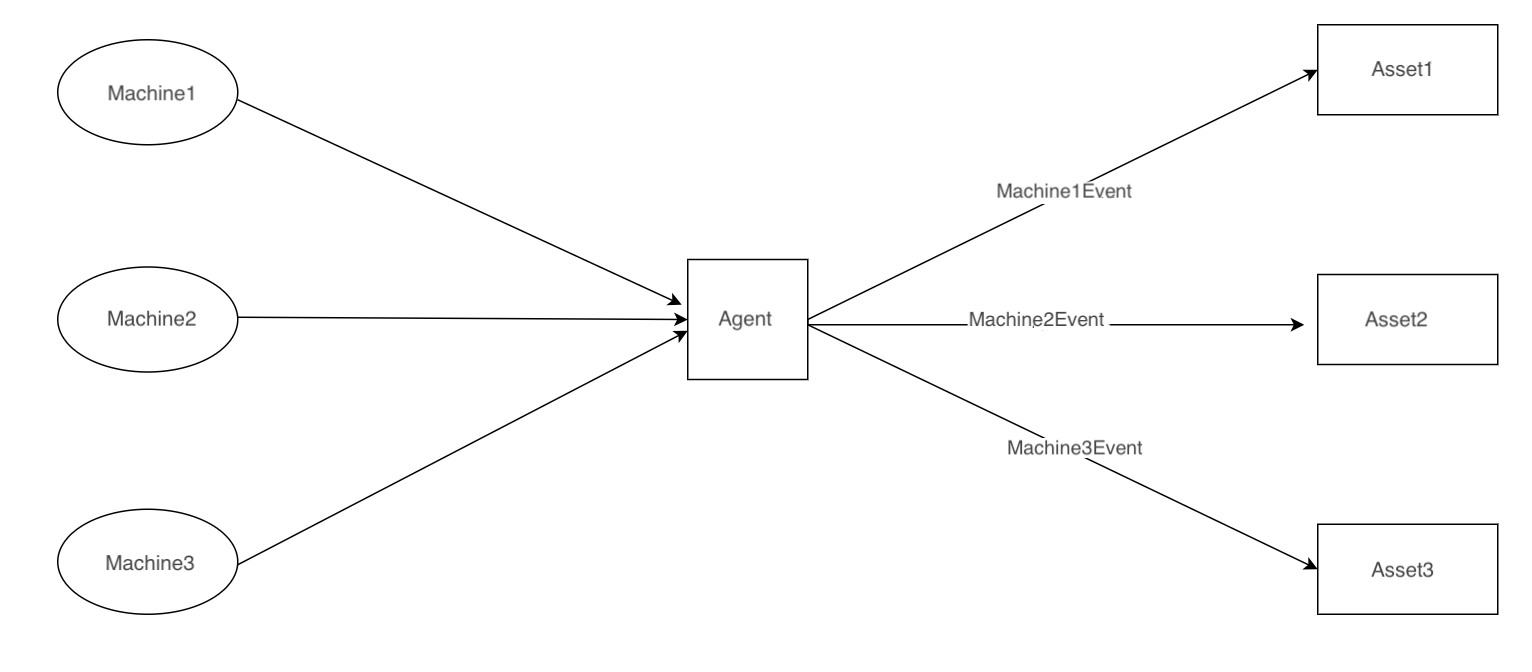

Events originating from a device in the field are sent to Insights Hub using the MindConnect API and are stored in the corresponding agent asset. For example, if a field device is connected to Insights Hub via an MCLIB agent, all events originating from that device are stored in the MCLIB agent (type core.mclib). Using the event mapping APIs, these events can be mapped to the appropriate asset in Insights Hub. The API allows you to define mapping criteria. For example, if the event type field source contains “MyMachine”, the events are mapped to the asset given in the assetId field of the request. Once the mapping is in place, every event of the selected type that reaches the agent and matches the criteria is automatically stored against the asset. Otherwise, the event remains in the agent itself.

- If there are no event mappings matching an event, that event will be stored in the agent asset.

- Multiple mappings can match for an event uploaded. In such a case, all matching mappings will be applied for the event.

- An asset can have multiple event mappings.

- Maximum of 50 event mappings can be created per agent.

- Maximum of 5 event mappings can be created per agent from an event type.
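The rules above can be sketched as follows. This is an illustrative model only, not the service's implementation: it assumes that a mapping matches when the event's type name equals eventTypeName and the named top-level event field equals eventTypeFieldValue exactly. The function names are hypothetical.

```python
MAX_MAPPINGS_PER_AGENT = 50
MAX_MAPPINGS_PER_EVENT_TYPE = 5


def resolve_target_assets(event, mappings, agent_asset_id):
    """Return the asset IDs an uploaded event would be stored against."""
    targets = [
        m["assetId"]
        for m in mappings
        if m["eventTypeName"] == event["type"]
        and event.get(m["eventTypeFieldName"]) == m["eventTypeFieldValue"]
    ]
    # All matching mappings apply; without a match the event stays in the agent.
    return targets or [agent_asset_id]


def can_add_mapping(agent_mappings, new_mapping):
    """Check the per-agent and per-event-type limits for one agent's mappings."""
    same_type = [
        m for m in agent_mappings if m["eventTypeId"] == new_mapping["eventTypeId"]
    ]
    return (
        len(agent_mappings) < MAX_MAPPINGS_PER_AGENT
        and len(same_type) < MAX_MAPPINGS_PER_EVENT_TYPE
    )


mapping = {
    "assetId": "2196dbc396cd4a87a9bd6b723fsb7baz",
    "eventTypeId": "mytenant.connectivity.event.type.TestEventType",
    "eventTypeName": "TestEventType",
    "eventTypeFieldName": "source",
    "eventTypeFieldValue": "Trumpfh1",
}
event = {"type": "TestEventType", "source": "Trumpfh1"}
print(resolve_target_assets(event, [mapping], "agent-asset"))
```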

Before creating an event mapping, check the use case and the limits described above.

Use the MindConnect API to create the event mapping as shown below:

POST /api/mindconnect/v3/eventMappings HTTP/1.1

Content-Type: Application/Json

Authorization: Bearer eyc..

{

"agentId": "11961bc396cd4a87a9b26b723f5b7ba0",

"assetId": "2196dbc396cd4a87a9bd6b723fsb7baz",

"eventTypeId": "mytenant.connectivity.event.type.TestEventType",

"eventTypeName": "TestEventType",

"eventTypeFieldName": "source",

"eventTypeFieldValue": "Trumpfh1"

}

An event mapping can be updated using the MindConnect API eventMappings/id endpoint:

PATCH /api/mindconnect/v3/eventMappings/{id} HTTP/1.1

Content-Type: Application/Json

Authorization: Bearer eyc..

{

"agentId": "11961bc396cd4a87a9b26b723f5b7ba0",

"assetId": "2196dbc396cd4a87a9bd6b723fsb7baz",

"eventTypeId": "mytenant.connectivity.event.type.TestEventType",

"eventTypeName": "TestEventType",

"eventTypeFieldName": "source",

"eventTypeFieldValue": "Trumpfh1",

"eTag": "1"

}

The PATCH operation supports partial updates, meaning that if there is an update only for the eventTypeFieldValue, it is sufficient to include this field in the request, as shown in the following example request.

PATCH /api/mindconnect/v3/eventMappings/{id} HTTP/1.1

Content-Type: Application/Json

Authorization: Bearer eyc..

{

"eventTypeFieldValue": "Trumpfh2"

}
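One way to produce such a partial body is to compare the stored mapping with the desired one and keep only the fields that changed. The helper below is a hypothetical sketch. Whether an eTag must accompany a partial update is not stated here, so the sketch leaves it to the caller.

```python
import json


def build_patch_body(current, desired):
    """Keep only the fields whose values differ from the stored mapping."""
    return {key: value for key, value in desired.items() if current.get(key) != value}


current = {
    "agentId": "11961bc396cd4a87a9b26b723f5b7ba0",
    "eventTypeFieldName": "source",
    "eventTypeFieldValue": "Trumpfh1",
}
desired = dict(current, eventTypeFieldValue="Trumpfh2")

# json.dumps always emits valid JSON, so no trailing comma can slip in.
print(json.dumps(build_patch_body(current, desired)))
```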

Uploading Data¶

The exchange endpoint of the MindConnect API provides the agent with the capability of uploading data to Insights Hub. This data can be of type:

- Time Series

- File

- Event

Agents require an access token with the mdsp:core:DefaultAgent scope to upload data.

The format conforms to a subset of the HTTP multipart specification, but only permits nesting of 2 levels.

Mandatory New Line

<CR><LF> at the end of a request represents a mandatory new line (Carriage Return and Line Feed). This representation is used for emphasis in the following samples.

Replace these characters with \r\n when uploading data to Insights Hub.

Uploading Time Series Data¶

Below is a sample request with a multipart message to upload time series data:

POST {{ _gateway_url }}/api/mindconnect/v3/exchange

Content-Type: multipart/mixed; boundary=f0Ve5iPP2ySppIcDSR6Bak

Authorization: Bearer access_token ...

--f0Ve5iPP2ySppIcDSR6Bak

Content-Type: multipart/related;boundary=penFL6sBQHJJUN3HA4ftqC

--penFL6sBQHJJUN3HA4ftqC

Content-Type: application/vnd.siemens.mindsphere.meta+json

{

"type": "item",

"version": "1.0",

"payload": {

"type": "standardTimeSeries",

"version": "1.0",

"details": {

"configurationId": "{{ _configuration_id }}"

}

}

}

--penFL6sBQHJJUN3HA4ftqC

Content-Type: application/json

[

{

"timestamp": "2017-02-01T08:30:03.780Z",

"values": [

{

"dataPointId": "{{ _datapoint_id_1 }}",

"value": "9856",

"qualityCode": "0"

},

{

"dataPointId": "{{ _datapoint_id_2 }}",

"value": "3766",

"qualityCode": "0"

}

]

}

]

--penFL6sBQHJJUN3HA4ftqC--

--f0Ve5iPP2ySppIcDSR6Bak--

<CR><LF>

The multipart message starts with the --f0Ve5iPP2ySppIcDSR6Bak identifier. It closes with the --f0Ve5iPP2ySppIcDSR6Bak-- identifier, which is the starting identifier with a double dash appended. Once it has accepted the structure of a multipart request, Insights Hub returns an HTTP response 200 OK with an empty body. Insights Hub validates and stores data asynchronously. The agent can continue uploading data as long as its access token is valid.

The multipart messages occur within this initial boundary. Each multipart message consists of metadata and a payload, therefore each message contains two boundary start identifiers and a single end identifier at the end:

--initial boundary

--boundary1

Metadata

--boundary1

Payload

--boundary1--

--initial boundary--

<CR><LF>

Note

The property configurationId in the metadata part of the multipart message tells Insights Hub how to interpret the data it receives (see section Creating a Data Source Configuration).
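The nesting of boundaries and the mandatory line endings can be sketched in Python as shown here. The function name and the boundary strings are illustrative. The layout follows the time series sample in this section, with every line break written as \r\n and a final \r\n after the closing boundary.

```python
import json

CRLF = "\r\n"
META_TYPE = "application/vnd.siemens.mindsphere.meta+json"


def build_timeseries_body(outer, inner, configuration_id, records):
    """Assemble one metadata/payload tuple inside the outer boundary."""
    metadata = {
        "type": "item",
        "version": "1.0",
        "payload": {
            "type": "standardTimeSeries",
            "version": "1.0",
            "details": {"configurationId": configuration_id},
        },
    }
    lines = [
        f"--{outer}",
        f"Content-Type: multipart/related;boundary={inner}",
        "",
        f"--{inner}",
        f"Content-Type: {META_TYPE}",
        "",
        json.dumps(metadata),
        f"--{inner}",
        "Content-Type: application/json",
        "",
        json.dumps(records),
        f"--{inner}--",
        f"--{outer}--",
        "",  # empty last element yields the mandatory trailing <CR><LF>
    ]
    return CRLF.join(lines)


records = [{
    "timestamp": "2017-02-01T08:30:03.780Z",
    "values": [{"dataPointId": "dp1", "value": "9856", "qualityCode": "0"}],
}]
body = build_timeseries_body(
    "f0Ve5iPP2ySppIcDSR6Bak", "penFL6sBQHJJUN3HA4ftqC", "configuration1", records
)
print(body)
```

The outer boundary passed to the function must match the boundary parameter of the request's Content-Type header.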

Uploading Files¶

Agents can upload files into Insights Hub, where they are stored in the respective asset. Within Insights Hub, metadata and payload pairs are referred to as tuples. Each tuple may contain a different type of data (for example, one tuple may contain time series data for a specific timestamp, whereas another may contain application/octet-stream data). The example below contains an exchange payload for the application/octet-stream MIME type:

POST {{ _gateway_url }}/api/mindconnect/v3/exchange

Content-Type: multipart/mixed; boundary=f0Ve5iPP2ySppIcDSR6Bak

Authorization: Bearer access_token ...

--f0Ve5iPP2ySppIcDSR6Bak

Content-Type: multipart/related;boundary=5c6d7e29ef6868d0eb73

--5c6d7e29ef6868d0eb73

Content-Type: application/vnd.siemens.mindsphere.meta+json

{

"type": "item",

"version": "1.0",

"payload": {

"type": "file",

"version": "1.0",

"details":{

"fileName":"data_collector.log.old",

"creationDate":"2017-02-10T13:00:00.000Z",

"fileType":"log"

}

}

}

--5c6d7e29ef6868d0eb73

Content-Type: application/octet-stream

... File content here ...

--5c6d7e29ef6868d0eb73--

--f0Ve5iPP2ySppIcDSR6Bak--

<CR><LF>
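Because file content is binary, a file tuple is best assembled as bytes. The following sketch mirrors the sample above. The helper names are hypothetical, and it assumes that the file content does not itself contain the chosen boundary strings.

```python
import json
from datetime import datetime, timezone

CRLF = b"\r\n"
META_TYPE = b"application/vnd.siemens.mindsphere.meta+json"


def iso_millis(moment):
    """Format a timezone-aware datetime as UTC with millisecond precision."""
    utc = moment.astimezone(timezone.utc)
    return utc.strftime("%Y-%m-%dT%H:%M:%S.") + f"{utc.microsecond // 1000:03d}Z"


def build_file_body(outer, inner, file_name, file_type, created, content):
    """Assemble the metadata and octet-stream parts of a file upload."""
    metadata = {
        "type": "item",
        "version": "1.0",
        "payload": {
            "type": "file",
            "version": "1.0",
            "details": {
                "fileName": file_name,
                "creationDate": iso_millis(created),
                "fileType": file_type,
            },
        },
    }
    outer_b, inner_b = outer.encode("ascii"), inner.encode("ascii")
    parts = [
        b"--" + outer_b,
        b"Content-Type: multipart/related;boundary=" + inner_b,
        b"",
        b"--" + inner_b,
        b"Content-Type: " + META_TYPE,
        b"",
        json.dumps(metadata).encode("utf-8"),
        b"--" + inner_b,
        b"Content-Type: application/octet-stream",
        b"",
        content,  # raw file bytes, inserted unchanged
        b"--" + inner_b + b"--",
        b"--" + outer_b + b"--",
        b"",  # yields the mandatory trailing <CR><LF>
    ]
    return CRLF.join(parts)


created = datetime(2017, 2, 10, 13, 0, 0, tzinfo=timezone.utc)
file_body = build_file_body(
    "f0Ve5iPP2ySppIcDSR6Bak", "5c6d7e29ef6868d0eb73",
    "data_collector.log.old", "log", created, b"line 1\nline 2\n",
)
print(len(file_body))
```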

Uploading Events¶

Agents can upload events into Insights Hub, where they are stored in the respective asset. The events must be of an event type derived from type AgentBaseEvent. The following rules apply for uploading events:

- The fields eventId and version are required in the payload. Allowed values for the version are: MAJOR, MINOR.

- The event type must exist in Event Management. Otherwise, the event is dropped.

- The severity is optional, but events with invalid severity values are dropped. Allowed integer values depend on the version of an event.

  - version 1.0: 1 (URGENT), 2 (IMPORTANT), 3 (INFORMATION)

  - version 2.0: 20 (ERROR), 30 (WARNING), 40 (INFORMATION)
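The severity rule lends itself to a small client-side check before upload. The sketch below encodes the allowed values per version as listed above. Treating an unknown version as invalid whenever a severity is present is an assumption made for this illustration.

```python
ALLOWED_SEVERITIES = {
    "1.0": {1: "URGENT", 2: "IMPORTANT", 3: "INFORMATION"},
    "2.0": {20: "ERROR", 30: "WARNING", 40: "INFORMATION"},
}


def is_severity_valid(event):
    """Severity is optional; when present it must fit the event's version."""
    if "severity" not in event:
        return True
    # Assumption: an unknown version has no allowed severities.
    return event["severity"] in ALLOWED_SEVERITIES.get(event.get("version"), {})


print(is_severity_valid({"version": "1.0", "severity": 2}))
print(is_severity_valid({"version": "2.0", "severity": 2}))
```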

Model of AgentBaseEvent

{

"id": "core.connectivity.event.type.AgentBaseEvent",

"name": "AgentBaseEvent",

"ttl": 35,

"parentId": "com.siemens.mindsphere.eventmgmt.event.type.MindSphereStandardEvent",

"fields": [

{

"name": "eventId",

"filterable": true,

"required": true,

"updatable": true,

"type": "STRING"

},

{

"name": "version",

"filterable": true,

"required": true,

"updatable": true,

"type": "STRING"

}

]

}

Here is a sample custom event type which is derived from AgentBaseEvent:

{

"name": "FileUploadCompletedEvent",

"parentId": "core.connectivity.event.type.AgentBaseEvent",

"ttl": 35,

"scope": "LOCAL",

"fields": [

{

"name": "fileNumber",

"filterable": true,

"required": false,

"updatable": true,

"type": "INTEGER"

},

{

"name": "fileSize",

"filterable": true,

"required": false,

"updatable": true,

"type": "DOUBLE"

},

{

"name": "fileName",

"filterable": true,

"required": true,

"updatable": true,

"type": "STRING"

},

{

"name": "isValid",

"filterable": true,

"required": false,

"updatable": true,

"type": "BOOLEAN"

}

]

}

Here is a sample request for event upload:

--FRaqbC9wSA2XvpFVjCRGry

Content-Type: multipart/related;boundary=5c6d7e29ef6868d0eb73

--5c6d7e29ef6868d0eb73

Content-Type: application/vnd.siemens.mindsphere.meta+json

{

"type": "item",

"version": "1.0",

"payload": {

"type": "businessEvent",

"version": "1.0"

}

}

--5c6d7e29ef6868d0eb73

Content-Type: application/json

[

{

"id": "7ba7b810-9dad-11d1-80b4-00c04fd430c8",

"correlationId": "fd7fb194-cd73-4a54-9e53-97aca7bc8568",

"timestamp": "2018-07-11T11:06:25.317Z",

"severity": 2,

"type": "FileUploadCompletedEvent",

"description": "file uploaded event",

"version": "1.0",

"details":{

"fileName": "test1",

"fileSize": 11.2,

"isValid": "true",

"fileNumber": 15

}

}

]

--5c6d7e29ef6868d0eb73--

--FRaqbC9wSA2XvpFVjCRGry--

<CR><LF>

Uploading Compressed Data¶

Agents can upload compressed data using the gzip format. Sending large volumes of data is costly for end users in terms of both time and money. Compressing the data with gzip reduces data and bandwidth consumption.

Below are sample requests with a multipart message to upload time series data in gzip format:

Python Example¶

import http.client

import gzip

conn = http.client.HTTPSConnection("gateway.eu1.mindsphere.io")

payload = '--FRaqbC9wSA2XvpFVjCRGry\r\nContent-Type: multipart/related;boundary=WtIIZS3TRqf70aZbLAX4cf\r\n\r\n--WtIIZS3TRqf70aZbLAX4cf\r\nContent-Type: application/vnd.siemens.mindsphere.meta+json\r\n\r\n {\r\n "type": "item",\r\n "version": "1.0",\r\n "payload": {\r\n "type": "standardTimeSeries",\r\n "version": "1.0",\r\n "details": {\r\n "configurationId": "configuration1"\r\n }\r\n }\r\n }\r\n--WtIIZS3TRqf70aZbLAX4cf\r\nContent-Type: application/json\r\n\r\n[{\r\n "timestamp": "2023-06-11T03:06:25.317Z",\r\n "values": [{\r\n "dataPointId": "dp1",\r\n "value": "12.2",\r\n "qualityCode": "00000000"\r\n },{\r\n "dataPointId": "dp2",\r\n "value": "test1",\r\n "qualityCode": "00000000"\r\n }\r\n ]\r\n },{\r\n "timestamp": "2023-06-11T04:07:25.317Z",\r\n "values": [{\r\n "dataPointId": "dp1",\r\n "value": "13.3",\r\n "qualityCode": "00000000"\r\n },{\r\n "dataPointId": "dp2",\r\n "value": "test2",\r\n "qualityCode": "00000000"\r\n }\r\n ]\r\n }]\r\n--WtIIZS3TRqf70aZbLAX4cf--\r\n--FRaqbC9wSA2XvpFVjCRGry--'

payload_bytes = bytes(payload, 'utf-8')

compressed_payload = gzip.compress(payload_bytes)

headers = {

'Authorization': 'Bearer access_token ...',

'Content-Type': 'multipart/mixed; boundary=FRaqbC9wSA2XvpFVjCRGry',

'Content-Encoding': 'application/gzip'

}

conn.request("POST", "/api/mindconnect/v3/exchange", compressed_payload, headers)

res = conn.getresponse()

data = res.read()

print(res.status)

print(data.decode("utf-8"))

JavaScript Example¶

const axios = require('axios');

const zlib = require('zlib');

let payload = '--FRaqbC9wSA2XvpFVjCRGry\r\nContent-Type: multipart/related;boundary=WtIIZS3TRqf70aZbLAX4cf\r\n\r\n--WtIIZS3TRqf70aZbLAX4cf\r\nContent-Type: application/vnd.siemens.mindsphere.meta+json\r\n\r\n {\r\n "type": "item",\r\n "version": "1.0",\r\n "payload": {\r\n "type": "standardTimeSeries",\r\n "version": "1.0",\r\n "details": {\r\n "configurationId": "configuration1"\r\n }\r\n }\r\n }\r\n--WtIIZS3TRqf70aZbLAX4cf\r\nContent-Type: application/json\r\n\r\n[{\r\n "timestamp": "2023-06-11T03:06:25.317Z",\r\n "values": [{\r\n "dataPointId": "dp1",\r\n "value": "12.2",\r\n "qualityCode": "00000000"\r\n },{\r\n "dataPointId": "dp2",\r\n "value": "test1",\r\n "qualityCode": "00000000"\r\n }\r\n ]\r\n },{\r\n "timestamp": "2023-06-11T04:07:25.317Z",\r\n "values": [{\r\n "dataPointId": "dp1",\r\n "value": "13.3",\r\n "qualityCode": "00000000"\r\n },{\r\n "dataPointId": "dp2",\r\n "value": "test2",\r\n "qualityCode": "00000000"\r\n }\r\n ]\r\n }]\r\n--WtIIZS3TRqf70aZbLAX4cf--\r\n--FRaqbC9wSA2XvpFVjCRGry--';

zlib.gzip(payload, (err, compressedPayloadBuffer) => {

if (err) {

console.error(err);

return;

}

let config = {

method: 'post',

url: 'https://gateway.eu1.mindsphere.io/api/mindconnect/v3/exchange',

headers: {

'Authorization': 'Bearer access_token ...',

'Content-Type': 'multipart/mixed; boundary=FRaqbC9wSA2XvpFVjCRGry',

'Content-Encoding': 'application/gzip',

},

data: compressedPayloadBuffer

};

axios.request(config)

.then((response) => {

console.log(JSON.stringify(response.status));

})

.catch((error) => {

console.log(error);

});

});

Replaying an Exchange Request¶

If Insights Hub cannot process data after it has been uploaded via MindConnect API, MindConnect's Recovery Service stores the unprocessed data for 15 calendar days. For a list of the stored unprocessed data, use the recoverableRecords endpoint. It responds with a page of recoverable records as shown below. The response can be filtered by the agentId, correlationId, requestTime and dropReason fields using a JSON filter.

{

"id": "4fad6258-5def-4d84-a4c2-1481b209c116",

"correlationId": "7had568-5def-4d84-a4c2-1481b209c116",

"agentId": "33238f98784711e8adc0fa7ae01bbebc",

"requestTime": "2018-08-27T16:40:11.235Z",

"dropReason": " <[Dropped] TimeSeries Data is dropped. Validation failed for reason <Pre-processing of <153000> data points ended with <60600> valid and <92400> dropped data points. Following issues found: Data point with no mapping count is <92400>, including <[variable101, variable102, variable103, variable104, variable105, variable106, variable107, variable108, variable109, variable110]>.>>"

}

To inspect a record's payload, the MindConnect API provides the /recoverableRecords/{id}/downloadLink endpoint. The response contains a URL to the location where MindConnect API has stored the data, e.g.:

GET /api/mindconnect/v3/recoverableRecords/{id}/downloadLink HTTP/1.1

"https://bucketname-s3.eu-central-1.amazonaws.com/c9bcd-44ab-4cfa-a87e-d81e727d9af4?X"

If an invalid mapping or registration has caused the data not to be processed, the exchange request can be replayed after correcting the definition. Use the /recoverableRecords/{id}/replay endpoint of the MindConnect API to trigger a replay.

Note

If the data can successfully be processed after a replay, it is removed from the recovery system after 2 days.
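When deciding what to correct before a replay, it can help to pull the counts and the unmapped data point IDs out of a record's dropReason. The sketch below relies only on the wording of the sample record shown earlier in this section. Other drop reasons may be phrased differently, so both patterns are treated as optional.

```python
import re

COUNTS = re.compile(
    r"Pre-processing of <(\d+)> data points ended with <(\d+)> valid "
    r"and <(\d+)> dropped"
)
UNMAPPED = re.compile(r"including <\[([^\]]*)\]>")


def summarize_drop_reason(drop_reason):
    """Extract counts and listed data point IDs from a dropReason string."""
    counts = COUNTS.search(drop_reason)
    unmapped = UNMAPPED.search(drop_reason)
    return {
        "total": int(counts.group(1)) if counts else None,
        "valid": int(counts.group(2)) if counts else None,
        "dropped": int(counts.group(3)) if counts else None,
        "unmapped_ids": (
            [item.strip() for item in unmapped.group(1).split(",")]
            if unmapped else []
        ),
    }


sample = (
    " <[Dropped] TimeSeries Data is dropped. Validation failed for reason "
    "<Pre-processing of <153000> data points ended with <60600> valid and "
    "<92400> dropped data points. Following issues found: Data point with no "
    "mapping count is <92400>, including <[variable101, variable102]>.>>"
)
print(summarize_drop_reason(sample))
```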

Diagnosing Data Upload¶

Data uploaded via MindConnect API can be diagnosed with the Diagnostic Service feature. To activate an agent for the Diagnostic Service, use the diagnosticActivations endpoint of the MindConnect API with the following parameters:

POST /api/mindconnect/v3/diagnosticActivations HTTP/1.1

Content-Type: Application/Json

Authorization: Bearer eyc..

{

"agentId": "11961bc396cd4a87a9b26b723f5b7ba0",

"status": "ACTIVE"

}

| Parameter | Description | Troubleshooting |
|---|---|---|
| agentId | String, validated | HTTP 400: Agent is empty!; HTTP 404: Agent id {...} exists!; Agent id {...} could not be enabled. Because agent limitation is {...} for tenant {...}! |
| status | String, validated. Allowed inputs are: ACTIVE, INACTIVE, NULL. If NULL, value is set as ACTIVE by default. | HTTP 400: Custom statuses are not allowed. Use one of the allowed input values |

Note

The Diagnostic Service can be activated for up to 5 agents per tenant. Diagnostic activation statuses are set to inactive after 1 hour. Activations can be deleted by the diagnosticActivations/id endpoint.
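The validation rules for an activation request can be mirrored on the client before the request is sent. The helper below is a hypothetical sketch. It applies the default status and rejects custom statuses, reusing the error texts from the table above.

```python
ALLOWED_STATUSES = ("ACTIVE", "INACTIVE")


def build_activation(agent_id, status=None):
    """Return a diagnosticActivations request body with the default applied."""
    if not agent_id:
        raise ValueError("Agent is empty!")
    if status is None:
        status = "ACTIVE"  # a missing (NULL) status defaults to ACTIVE
    if status not in ALLOWED_STATUSES:
        raise ValueError("Custom statuses are not allowed.")
    return {"agentId": agent_id, "status": status}


print(build_activation("11961bc396cd4a87a9b26b723f5b7ba0"))
```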

A list of diagnostic activations is provided by the diagnosticActivations endpoint. It responds with a page of diagnostic activations as shown below. The response can be filtered by the agentId and status fields using a JSON filter.

{

"content": [

{

"id": "0b2d1cdecc7611e7abc4cec278b6b50a",

"agentId": "3b27818ea09a46b48c7eb3fbd878349f",

"status": "ACTIVE"

}

],

"last": true,

"totalPages": 1,

"totalElements": 1,

"numberOfElements": 1,

"first": true,

"sort": {},

"size": 20,

"number": 0

}

An activation can be updated or deleted using the diagnosticActivations/id endpoint:

PUT /api/mindconnect/v3/diagnosticActivations/{id} HTTP/1.1

{

"status": "INACTIVE"

}

| Parameter | Description | Troubleshooting |
|---|---|---|
| status | String, validated. Allowed inputs are: ACTIVE, INACTIVE | HTTP 400: Custom statuses are not allowed. Status value must be one of: [ACTIVE, INACTIVE] |

Diagnostic data is listed by the diagnosticInformation endpoint. Its response is a page as shown below, which can be filtered by the agentId, correlationId, message, source, timestamp and severity fields using a JSON filter.

{

"content": [

{

"agentId": "3b27818ea09a46b48c7eb3fbd878349f",

"correlationId": "3fcf2a5ecc7611e7abc4cec278b6b50a",

"severity": "INFO",

"message": "[Accepted] Exchange request arrived",

"source": "TIMESERIES",

"state": "ACCEPTED",

"timestamp": "2018-08-27T16:40:11.235Z"

},

{

"agentId": "78e73978d6284c75ac2b8b5c33ffff57",

"correlationId": "39301469413183203248389064099640",

"severity": "ERROR",

"message": "[Dropped] Registration is missing. Data type is: notstandardTimeSeries",

"source": "TIMESERIES",

"state": "DROPPED",

"timestamp": "2018-08-27T16:40:11.235Z"

}

],

"last": true,

"totalPages": 1,

"totalElements": 2,

"numberOfElements": 2,

"first": true,

"sort": {},

"size": 20,

"number": 0

}

Diagnostic data of a given activation is provided by the /diagnosticActivations/{id}/messages endpoint. Its response is a page as shown below, which can be filtered by the correlationId, message, source, timestamp and severity fields using a JSON filter.

{

"content": [

{

"correlationId": "3fcf2a5ecc7611e7abc4cec278b6b50a",

"severity": "INFO",

"message": "[Accepted] Exchange request arrived",

"source": "TIMESERIES",

"state": "ACCEPTED",

"timestamp": "2018-08-27T16:40:11.235Z"

},

{

"correlationId": "39301469413183203248389064099640",

"severity": "ERROR",

"message": "[Dropped] Registration is missing. Data type is: notstandardTimeSeries",

"source": "TIMESERIES",

"state": "DROPPED",

"timestamp": "2018-08-27T16:40:11.235Z"

}

],

"last": true,

"totalPages": 1,

"totalElements": 2,

"numberOfElements": 2,

"first": true,

"sort": {},

"size": 20,

"number": 0

}
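Once such a page has been parsed from JSON, the dropped uploads can be picked out by their state. The function below is an illustrative sketch that groups the messages of DROPPED entries by correlationId. It relies only on the field names shown in the sample responses.

```python
def dropped_messages(page):
    """Map correlationId to the messages of entries in state DROPPED."""
    result = {}
    for entry in page.get("content", []):
        if entry.get("state") == "DROPPED":
            result.setdefault(entry["correlationId"], []).append(entry["message"])
    return result


page = {
    "content": [
        {
            "correlationId": "3fcf2a5ecc7611e7abc4cec278b6b50a",
            "severity": "INFO",
            "message": "[Accepted] Exchange request arrived",
            "state": "ACCEPTED",
        },
        {
            "correlationId": "39301469413183203248389064099640",
            "severity": "ERROR",
            "message": "[Dropped] Registration is missing. "
                       "Data type is: notstandardTimeSeries",
            "state": "DROPPED",
        },
    ],
    "totalElements": 2,
}
print(dropped_messages(page))
```

The correlationId values found this way can then be used to look up the matching recoverable records.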
