Configuring Azure Power BI with Integrated Data Lake

To configure Azure Power BI with Integrated Data Lake, follow these steps:

-

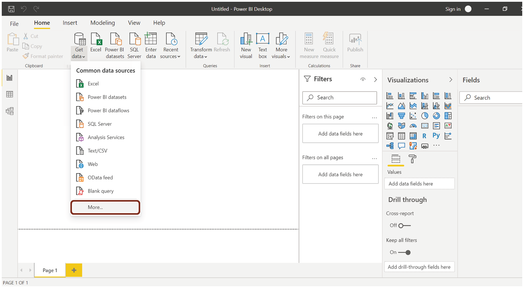

Open Power BI tool.

-

In Power BI tool, open "Get data" and select "More".

-

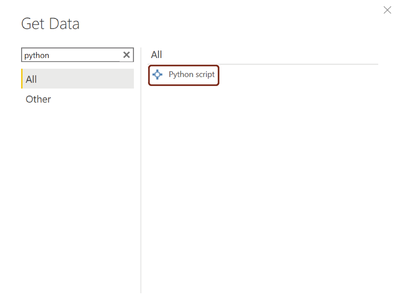

In Get Data screen, search for "python" and choose "Python script".

-

Click Connect.

-

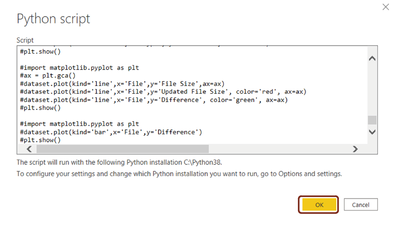

Configure the python script connector

- Sample Python Script to be used as given below:

Samplepythonscript

```python
# Sample script: load a CSV file from Integrated Data Lake into Azure Power BI.
from io import StringIO

import pandas as pd
from azure.identity import ClientSecretCredential
from azure.storage.filedatalake import DataLakeServiceClient


def load_file_in_powerbi(file_system_client, sampledata_directory, sampledata_filename):
    """Download a CSV file into the global DataFrame `data`.

    Power BI picks up pandas DataFrames defined at the top level of the script.
    """
    global data
    try:
        directory_client = file_system_client.get_directory_client(sampledata_directory)
        file_client = directory_client.get_file_client(sampledata_filename)
        downloaded_bytes = file_client.download_file().readall()
        data = pd.read_csv(StringIO(str(downloaded_bytes, "utf-8")))
        print(data)
    except Exception as e:
        print(e)


def initialize_storage_account_ad(storage_account_name, client_id, client_secret, tenant_id):
    """Create a Data Lake service client authenticated with the service principal."""
    global datalake_service_client
    try:
        credential = ClientSecretCredential(tenant_id, client_id, client_secret)
        datalake_service_client = DataLakeServiceClient(
            account_url=f"https://{storage_account_name}.dfs.core.windows.net",
            credential=credential,
        )
    except Exception as e:
        print(e)


def get_file_system_client(file_system_name):
    try:
        return datalake_service_client.get_file_system_client(file_system_name)
    except Exception as e:
        print(e)


def get_directory_client(file_system_client, directory_path):
    try:
        return file_system_client.get_directory_client(directory_path)
    except Exception as e:
        print(e)


def upload_file_to_directory(directory_client, src_file_path, file_name):
    """Upload a local file to the given Data Lake directory."""
    try:
        file_client = directory_client.create_file(file_name)
        # Read as bytes so that `length` is a byte count, also for non-ASCII text.
        with open(src_file_path, "rb") as local_file:
            file_contents = local_file.read()
        file_client.append_data(data=file_contents, offset=0, length=len(file_contents))
        file_client.flush_data(len(file_contents))
    except Exception as e:
        print(e)


def list_directory_contents(file_system_client, directory_path):
    try:
        return file_system_client.get_paths(directory_path)
    except Exception as e:
        print(e)


def download_file_from_directory(directory_client, file_name):
    """Download a file from the Data Lake directory into the current local folder."""
    try:
        file_client = directory_client.get_file_client(file_name)
        downloaded_bytes = file_client.download_file().readall()
        with open(file_name, "wb") as local_file:
            local_file.write(downloaded_bytes)
    except Exception as e:
        print(e)


def download_hierarchy_directory(file_system_client, directory_path):
    """Download the files directly inside directory_path, recursing into subdirectories."""
    try:
        directory_client = get_directory_client(file_system_client, directory_path)
        for path in list_directory_contents(file_system_client, directory_path):
            if path.is_directory:
                download_hierarchy_directory(file_system_client, path.name)
            else:
                # Only download files whose parent folder is directory_path itself.
                path_split = path.name.rsplit("/", 2)
                if path_split[1] == directory_path.rsplit("/", 1)[1]:
                    file_name = path_split[2]
                    print(file_name)
                    download_file_from_directory(directory_client, file_name)
    except Exception as e:
        print(e)


if __name__ == "__main__":
    # Replace the placeholders with your own values (see the table below).
    tenant_id = "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"
    client_id = "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"
    client_secret = "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"
    storage_account_name = "idltntprovisioningrc"
    file_system_name = "datalake-rc-punazdl"
    directory_path = "data/ten=punazdl/powerbi"
    sampledata_directory = "data/ten=punazdl/powerbi/sampledata"
    sampledata_filename = "file-size-upload.csv"

    initialize_storage_account_ad(storage_account_name, client_id, client_secret, tenant_id)
    file_system_client = get_file_system_client(file_system_name)
    paths = list_directory_contents(file_system_client, directory_path)
    directory_client = get_directory_client(file_system_client, directory_path)
    load_file_in_powerbi(file_system_client, sampledata_directory, sampledata_filename)
```
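The `load_file_in_powerbi` function decodes the downloaded bytes as UTF-8 before passing them to pandas. The sketch below isolates that decode step with only the standard library, so it can be tried without Data Lake access. It assumes the file is comma-separated text, and it swaps the script's plain `utf-8` for `utf-8-sig`, which additionally strips a leading byte-order mark (written by some tools, for example Excel CSV exports) that would otherwise end up in the first column name. The helper name `csv_bytes_to_rows` is hypothetical.

```python
import csv
import io


def csv_bytes_to_rows(downloaded_bytes: bytes) -> list[dict[str, str]]:
    """Decode raw CSV bytes (as returned by download_file().readall()) into rows.

    Hypothetical helper: 'utf-8-sig' removes a leading byte-order mark if present
    and otherwise decodes exactly like 'utf-8'.
    """
    text = downloaded_bytes.decode("utf-8-sig")
    # csv.DictReader treats the first line as the header row.
    return list(csv.DictReader(io.StringIO(text)))


if __name__ == "__main__":
    sample = "\ufeffDay,Value\n1,10\n2,20\n".encode("utf-8")
    rows = csv_bytes_to_rows(sample)
    print(rows[0])  # {'Day': '1', 'Value': '10'}
```

With pandas, the equivalent is `pd.read_csv(io.BytesIO(downloaded_bytes), encoding="utf-8-sig")`.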

| Parameter | Description |
|---|---|
| `tenant_id` | Tenant ID, available on the Service Principal page in Data Lake. |
| `client_id` | Application ID, which can be copied from the Service Principal page in Data Lake. |
| `client_secret` | Service Principal secret that you generated and copied. |
| `sampledata_directory` | Data Lake path for which the Service Principal was generated, containing the file to load. |
| `sampledata_filename` | File to be loaded into Azure Power BI. |
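The sample script hard-codes `client_secret` and the other service principal values listed in the table. A common alternative is to read them from environment variables so the secret does not have to be pasted into the script text. The sketch below assumes hypothetical variable names (`IDL_TENANT_ID`, `IDL_CLIENT_ID`, `IDL_CLIENT_SECRET`) and assumes they are visible to the Python process that Power BI starts. It fails early if a value is missing or if the tenant and client IDs are not well-formed GUIDs, which also catches unreplaced `xxxxxxxx-...` placeholders.

```python
import os
import uuid
from typing import Mapping

# Hypothetical environment variable names; adapt them to your setup.
REQUIRED = {
    "tenant_id": "IDL_TENANT_ID",
    "client_id": "IDL_CLIENT_ID",
    "client_secret": "IDL_CLIENT_SECRET",
}


def load_service_principal(env: Mapping[str, str] = os.environ) -> dict[str, str]:
    """Read the service principal settings from `env` and validate the ID formats."""
    missing = [var for var in REQUIRED.values() if not env.get(var)]
    if missing:
        raise KeyError(f"Missing environment variables: {', '.join(missing)}")
    settings = {key: env[var] for key, var in REQUIRED.items()}
    for key in ("tenant_id", "client_id"):
        # uuid.UUID raises ValueError if the value is not a valid GUID.
        uuid.UUID(settings[key])
    return settings


# Usage (sketch): settings = load_service_principal(), then pass
# settings["client_id"], settings["client_secret"] and settings["tenant_id"]
# to initialize_storage_account_ad().
```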

6.Click OK to load data into Azure Power BI.

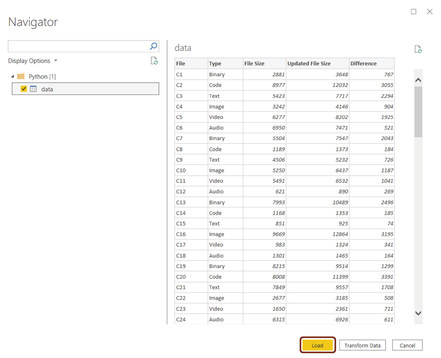

7.Click "Load".

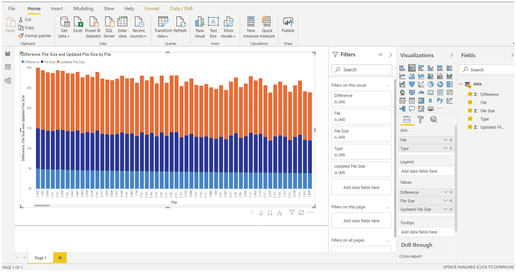

Result

The data is now loaded into Azure Power BI, and you can start visualizing it.

Last update: February 13, 2024